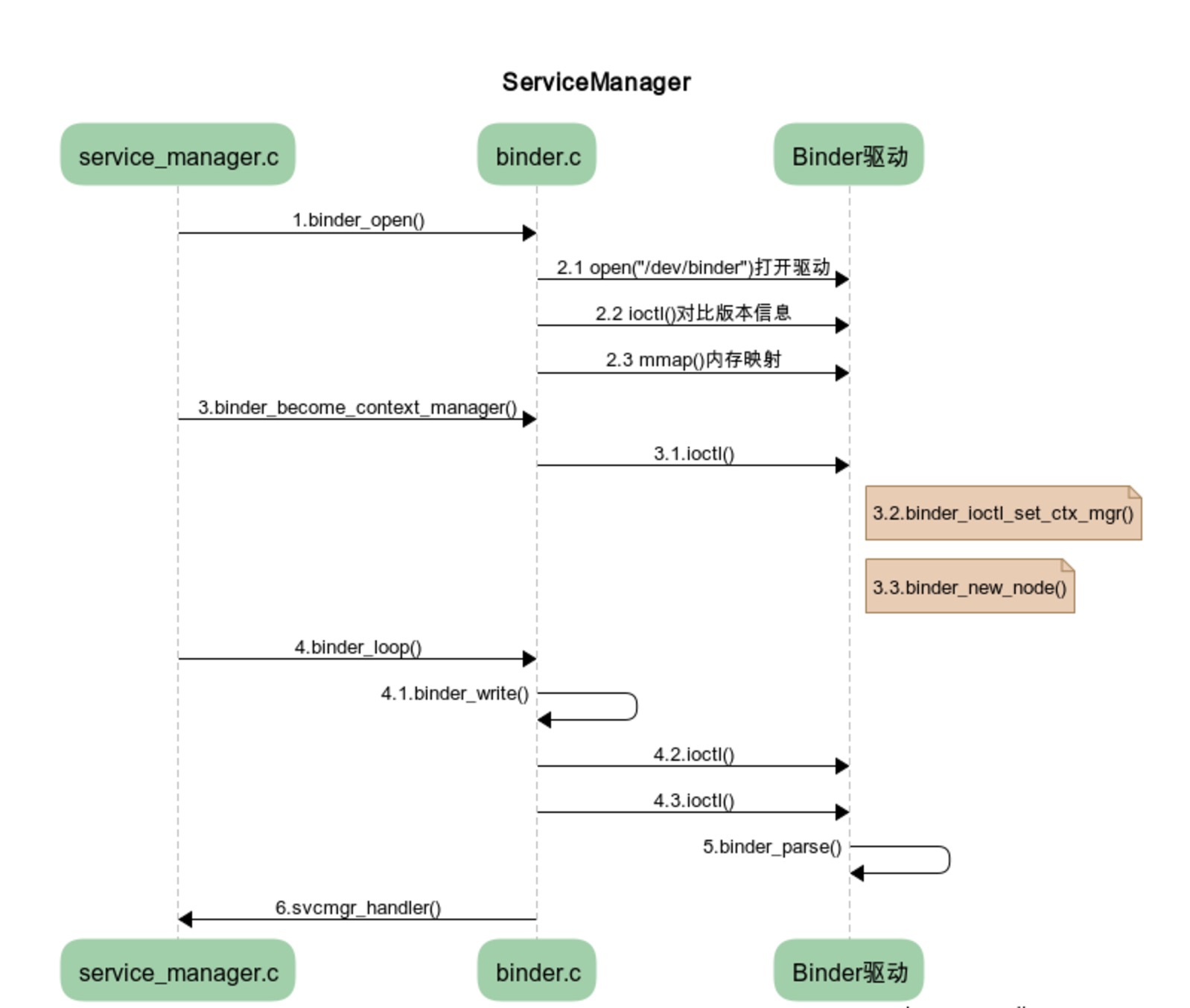

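Almost every step of Binder communication involves ServiceManager, so it is worth understanding how ServiceManager works. Its work falls into four parts: starting up, and then looking up, adding, and registering services. We begin with the startup process, to see how ServiceManager becomes the Binder daemon.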


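1 Starting ServiceManager

- 1. After the init process starts, Android launches ServiceManager through the init.rc script:

|– init.rc

The corresponding executable is /system/bin/servicemanager, built from service_manager.c. Next we trace the main() entry point in service_manager.c.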

2.1 main

|– service_manager.c


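- The main entry function does three things:

- 1. Opens the Binder driver and requests memory

- 2. Tells the Binder driver that this process is the Binder context manager (ServiceManager becomes the daemon)

- 3. Starts a loop that handles IPC requests (waiting for Client requests)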
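The three steps above map onto a very short control flow. The sketch below mirrors that order of calls with hypothetical stand-ins (`stub_binder_open`, `stub_become_context_manager`, `stub_binder_loop`, `sm_main_sketch`) so that it runs without a Binder device. It illustrates the sequence only and is not the service_manager.c source; the 128 KB map size is the figure given in the summary of this article.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Records the order in which the three steps run (illustration only). */
static char sm_trace[64];

/* Hypothetical stand-in for binder_open(): "opens" the driver and maps memory. */
static int stub_binder_open(size_t mapsize)
{
    strcat(sm_trace, "open;");
    return mapsize > 0 ? 0 : -1;
}

/* Hypothetical stand-in for binder_become_context_manager(). */
static int stub_become_context_manager(void)
{
    strcat(sm_trace, "ctxmgr;");
    return 0;
}

/* Hypothetical stand-in for binder_loop(); the real loop keeps running
 * for as long as the driver delivers requests. */
static void stub_binder_loop(void)
{
    strcat(sm_trace, "loop;");
}

/* Control flow of main(): open, become context manager, loop. */
int sm_main_sketch(void)
{
    sm_trace[0] = '\0';

    if (stub_binder_open(128 * 1024) != 0) {
        fprintf(stderr, "failed to open binder driver\n");
        return -1;
    }
    if (stub_become_context_manager() != 0) {
        fprintf(stderr, "cannot become context manager\n");
        return -1;
    }
    stub_binder_loop();
    return 0;
}
```

Each step aborts startup if it fails, because the later steps have nothing to work with unless the driver is open and the context manager role has been granted.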

2.2 binder_open

The first step in ServiceManager's main entry is to open the Binder driver by calling binder_open. What exactly does binder_open do?

|– binder.c


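- Opening the Binder driver involves the following operations:

- Initialize the state structure binder_state

- Open the Binder driver with open()

- Use ioctl() to check that the kernel-space and user-space Binder versions match

- Map memory with mmap()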
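The four operations above can be sketched as a single function. This is a minimal sketch under stated assumptions, not the binder.c source: the names `binder_open_sketch`, `sketch_binder_state`, `sketch_binder_version`, and `SKETCH_BINDER_VERSION` are hypothetical, the device path and the expected protocol version are passed in as parameters so the function can be exercised on a machine without Binder, and the ioctl request number is written from memory of the kernel UAPI header and should be checked against it. The mmap flags are the ones quoted in the summary of this article.

```c
#include <fcntl.h>
#include <stdint.h>
#include <stdlib.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>

/* Local mirror of the driver's version struct (assumption: one 32-bit field). */
struct sketch_binder_version {
    int32_t protocol_version;
};

/* Assumed request number; the authoritative definition is BINDER_VERSION
 * in the kernel's binder UAPI header. */
#define SKETCH_BINDER_VERSION _IOWR('b', 9, struct sketch_binder_version)

struct sketch_binder_state {
    int fd;          /* file descriptor of the opened driver */
    void *mapped;    /* start of the mapped region */
    size_t mapsize;  /* size of the mapping in bytes */
};

/* Returns a state object on success, or NULL if any step fails. */
struct sketch_binder_state *binder_open_sketch(const char *dev, size_t mapsize,
                                               int32_t expected_version)
{
    struct sketch_binder_state *bs;
    struct sketch_binder_version vers;

    /* 1. Initialize the state structure. */
    bs = malloc(sizeof(*bs));
    if (bs == NULL)
        return NULL;

    /* 2. Open the driver. */
    bs->fd = open(dev, O_RDWR);
    if (bs->fd < 0)
        goto fail_open;

    /* 3. Ask the driver for its protocol version and compare it. */
    if (ioctl(bs->fd, SKETCH_BINDER_VERSION, &vers) == -1 ||
        vers.protocol_version != expected_version)
        goto fail_close;

    /* 4. Map the receive area read-only into this process. */
    bs->mapsize = mapsize;
    bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);
    if (bs->mapped == MAP_FAILED)
        goto fail_close;

    return bs;

fail_close:
    close(bs->fd);
fail_open:
    free(bs);
    return NULL;
}
```

On a device, a caller would pass "/dev/binder", 128 * 1024, and the protocol version its headers were built against. Pointing the sketch at a file that is not a Binder device makes the version ioctl fail, so the function cleans up and returns NULL.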

2.3 binder_become_context_manager

- After opening the Binder driver with binder_open, the main entry calls binder_become_context_manager, which makes ServiceManager the Binder context manager. Let's read the source to see what it does.

|– binder.c


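- 1. This function simply calls ioctl(). The kernel source file kernel/drivers/android/binder.c was not available, so only the call logic is outlined here.

- 2. The ioctl() call ends up in the driver's binder_ioctl(), which dispatches on the BINDER_SET_CONTEXT_MGR command and finally calls binder_ioctl_set_ctx_mgr(). binder_main_lock is held during this process.

- 3. binder_ioctl_set_ctx_mgr first makes sure the mgr_node object is created only once, and records the current thread's euid as the ServiceManager uid. At the end it creates the binder_node that represents ServiceManager through binder_new_node.

- 4. binder_new_node allocates memory for the new binder_node and inserts the node into the proc's red-black tree. Finally it initializes two lists: async_todo and binder_work.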
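The "create only once" and uid rules in steps 3 and 4 can be modeled in user space. The sketch below is a simplified model, not kernel code: `model_context`, `model_node`, and `model_set_ctx_mgr` are hypothetical names, the red-black tree and list initialization are left out, and the specific error codes (-EBUSY, -EPERM, -ENOMEM) are assumptions about what the driver returns.

```c
#include <errno.h>
#include <stdlib.h>

/* Simplified stand-in for the kernel's binder_node. */
struct model_node {
    int is_context_mgr;
};

/* Simplified stand-in for the driver state that tracks the manager. */
struct model_context {
    struct model_node *mgr_node; /* NULL until a manager registers */
    int mgr_uid_valid;           /* 0 until a uid has been recorded */
    unsigned int mgr_uid;        /* euid of the registered manager */
};

/* Models the handling of BINDER_SET_CONTEXT_MGR for a caller with the
 * given euid. Returns 0 on success or a negative errno value. */
int model_set_ctx_mgr(struct model_context *ctx, unsigned int caller_euid)
{
    /* The manager node may be created only once. */
    if (ctx->mgr_node != NULL)
        return -EBUSY;

    if (ctx->mgr_uid_valid) {
        /* A uid was recorded earlier: only that uid may register. */
        if (ctx->mgr_uid != caller_euid)
            return -EPERM;
    } else {
        /* First registration: remember the caller's euid. */
        ctx->mgr_uid = caller_euid;
        ctx->mgr_uid_valid = 1;
    }

    /* Stand-in for binder_new_node(): allocate the node object. */
    ctx->mgr_node = calloc(1, sizeof(*ctx->mgr_node));
    if (ctx->mgr_node == NULL)
        return -ENOMEM;
    ctx->mgr_node->is_context_mgr = 1;
    return 0;
}
```

A second registration attempt is rejected, which is why only one process in the system can act as ServiceManager.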

2.4 binder_loop

After binder_become_context_manager returns, main calls binder_loop to start the loop that handles IPC requests. Its source looks like this:


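Main operations:

- binder_write sends the BC_ENTER_LOOPER command to the binder driver through ioctl(), and ServiceManager enters its loop

- Inside the loop, it reads and writes continuously

- Whatever is read back is parsed by binder_parse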
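The loop described above can be sketched with the driver abstracted behind function pointers, so that it runs against a fake driver. Everything here is hypothetical scaffolding (`loop_driver`, `binder_loop_sketch`, the `fake_*` helpers, and the numeric value of `LOOP_CMD_ENTER_LOOPER`); in the real code each `write` and `read` would be an ioctl() on the Binder file descriptor.

```c
#include <stddef.h>
#include <stdint.h>

/* Illustrative value only; the real BC_ENTER_LOOPER comes from the driver header. */
#define LOOP_CMD_ENTER_LOOPER 1u

/* Hypothetical driver interface: each function stands in for one ioctl(). */
struct loop_driver {
    int (*write)(void *self, const void *data, size_t len); /* 0 on success */
    long (*read)(void *self, void *buf, size_t cap);        /* bytes read, <0 on error */
    void *self;
};

/* Parses what was read; returns 1 to keep looping, 0 or <0 to stop. */
typedef int (*loop_parse_fn)(const uint32_t *buf, size_t len, void *cookie);

/* Returns the number of reads that were parsed, or -1 if entering failed. */
int binder_loop_sketch(struct loop_driver *drv, loop_parse_fn parse, void *cookie)
{
    uint32_t readbuf[32];
    int rounds = 0;

    /* Tell the driver that this thread is entering its loop. */
    readbuf[0] = LOOP_CMD_ENTER_LOOPER;
    if (drv->write(drv->self, readbuf, sizeof(uint32_t)) != 0)
        return -1;

    for (;;) {
        long n = drv->read(drv->self, readbuf, sizeof(readbuf));
        if (n < 0)
            break;                                  /* driver error ends the loop */
        if (parse(readbuf, (size_t)n, cookie) <= 0)
            break;                                  /* parser asked to stop */
        rounds++;
    }
    return rounds;
}

/* A tiny fake driver that delivers a fixed number of reads, then fails. */
struct fake_driver { int entered; int reads_left; };

static int fake_write(void *self, const void *data, size_t len)
{
    struct fake_driver *fd = self;
    if (len != sizeof(uint32_t) || *(const uint32_t *)data != LOOP_CMD_ENTER_LOOPER)
        return -1;
    fd->entered = 1;
    return 0;
}

static long fake_read(void *self, void *buf, size_t cap)
{
    struct fake_driver *fd = self;
    if (!fd->entered || fd->reads_left == 0 || cap < sizeof(uint32_t))
        return -1;
    fd->reads_left--;
    ((uint32_t *)buf)[0] = 0;
    return (long)sizeof(uint32_t);
}

static int count_parse(const uint32_t *buf, size_t len, void *cookie)
{
    (void)buf; (void)len;
    (*(int *)cookie)++;
    return 1;
}

/* Runs the loop against the fake driver configured for three reads. */
int binder_loop_demo(void)
{
    struct fake_driver fd = { 0, 3 };
    struct loop_driver drv = { fake_write, fake_read, &fd };
    int parsed = 0;
    int rounds = binder_loop_sketch(&drv, count_parse, &parsed);
    return rounds == parsed ? rounds : -1;
}
```

The same buffer is used first to send the enter-loop command and then to receive data, which is why the parse step always works on the read buffer.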

2.4.1 binder_write

- binder_loop uses binder_write to send the BC_ENTER_LOOPER command to the binder driver, so that ServiceManager enters its loop

|– binder.c


- In effect, this hands the BC_ENTER_LOOPER command it was given to the driver's binder_ioctl through the ioctl() call
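A write-only request is expressed by filling in only the write half of the binder_write_read structure and leaving the read half empty. The sketch below shows that preparation step; `sketch_bwr` is a local mirror of the structure (the 64-bit field widths are an assumption) and `prepare_write_only` is a hypothetical helper. The real function would follow this with an ioctl() using the BINDER_WRITE_READ request.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Local mirror of binder_write_read (assumption: all fields are 64 bits wide). */
struct sketch_bwr {
    uint64_t write_size;      /* bytes to write */
    uint64_t write_consumed;  /* bytes the driver consumed */
    uint64_t write_buffer;    /* address of the outgoing commands */
    uint64_t read_size;       /* bytes available for reading */
    uint64_t read_consumed;   /* bytes the driver filled in */
    uint64_t read_buffer;     /* address of the incoming buffer */
};

/* Prepares a request that only writes `len` bytes from `data`. */
void prepare_write_only(struct sketch_bwr *bwr, const void *data, size_t len)
{
    memset(bwr, 0, sizeof(*bwr));            /* read half stays zero: nothing to read */
    bwr->write_size = len;
    bwr->write_buffer = (uint64_t)(uintptr_t)data;
}
```

Because read_size is zero, the driver processes the outgoing command and returns without blocking to wait for incoming work.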

2.4.2 binder_ioctl

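- binder_ioctl uses binder_ioctl_write_read to copy the binder_write_read structure from user space into kernel space.

- If the write buffer contains data, binder_thread_write takes the cmd out of bwr.write_buffer and sets the thread's looper state to BINDER_LOOPER_STATE_ENTERED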
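The two steps above can be modeled in user space: copy the request, then process the write buffer if it holds anything. This is a simplified model rather than driver code. All `model_*` names are hypothetical, the memcpy calls stand in for the kernel's user/kernel copies, and the numeric values of the command and the state flag are illustrative assumptions.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MODEL_BC_ENTER_LOOPER      1u    /* illustrative command value */
#define MODEL_LOOPER_STATE_ENTERED 0x02  /* illustrative flag value */

/* Write half of the request, reduced to what this model needs. */
struct model_bwr {
    size_t write_size;            /* bytes available in write_buffer */
    size_t write_consumed;        /* bytes already processed */
    const uint32_t *write_buffer; /* commands sent by user space */
};

struct model_thread {
    int looper;                   /* looper state flags of the calling thread */
};

/* Models binder_thread_write: consume commands one word at a time. */
static int model_thread_write(struct model_thread *t, struct model_bwr *bwr)
{
    size_t nwords = bwr->write_size / sizeof(uint32_t);
    size_t i = bwr->write_consumed / sizeof(uint32_t);

    for (; i < nwords; i++) {
        uint32_t cmd = bwr->write_buffer[i];
        if (cmd != MODEL_BC_ENTER_LOOPER)
            return -1;                       /* this model knows one command only */
        t->looper |= MODEL_LOOPER_STATE_ENTERED;
        bwr->write_consumed += sizeof(uint32_t);
    }
    return 0;
}

/* Models binder_ioctl_write_read: copy in, process writes, copy back. */
int model_ioctl_write_read(struct model_thread *t, struct model_bwr *user_bwr)
{
    struct model_bwr bwr;
    int ret = 0;

    memcpy(&bwr, user_bwr, sizeof(bwr));     /* stand-in for the copy into kernel space */
    if (bwr.write_size > 0)
        ret = model_thread_write(t, &bwr);
    memcpy(user_bwr, &bwr, sizeof(bwr));     /* report write_consumed back to the caller */
    return ret;
}
```

Copying the structure back lets the caller see how many bytes were consumed.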

2.5 binder_parse

- During the read/write loop, the data returned by the binder driver is parsed. Here is the binder_parse source:


- Recall the call that starts the loop in ServiceManager: binder_loop(bs, svcmgr_handler)

- ptr points to the buffer that carried BC_ENTER_LOOPER, and func points to svcmgr_handler.
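Parsing amounts to walking the buffer one command at a time and calling func for each incoming transaction. The sketch below shows that shape with a deliberately tiny command set: the `PARSE_BR_*` values are illustrative rather than the driver's real codes, a transaction is reduced to a single payload word, and `binder_parse_sketch` and `parse_sum_handler` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Illustrative command codes for this model only. */
enum {
    PARSE_BR_NOOP = 1,        /* carries no payload */
    PARSE_BR_TRANSACTION = 2  /* followed by one payload word in this model */
};

/* Callback invoked for every transaction found in the buffer. */
typedef int (*parse_handler)(uint32_t payload, void *cookie);

/* Walks `size` bytes starting at `ptr`. Returns 1 when the whole buffer was
 * parsed, or -1 on an unknown command or a truncated transaction. */
int binder_parse_sketch(const uint32_t *ptr, size_t size,
                        parse_handler func, void *cookie)
{
    const uint32_t *end = ptr + size / sizeof(uint32_t);

    while (ptr < end) {
        uint32_t cmd = *ptr++;

        switch (cmd) {
        case PARSE_BR_NOOP:
            break;
        case PARSE_BR_TRANSACTION:
            if (ptr >= end)
                return -1;            /* payload is missing */
            if (func != NULL)
                func(*ptr, cookie);   /* hand the request to the handler */
            ptr++;
            break;
        default:
            return -1;                /* unknown command */
        }
    }
    return 1;
}

/* Example handler: adds each payload to the uint32_t that cookie points to. */
int parse_sum_handler(uint32_t payload, void *cookie)
{
    *(uint32_t *)cookie += payload;
    return 0;
}
```

Passing the handler as a parameter keeps the parsing code independent of ServiceManager, so the same loop can serve any process that supplies its own handler.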

2.6 svcmgr_handler

|– service_manager.c

Next, look at the svcmgr_handler source in ServiceManager:


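- This function provides three capabilities: looking up a service, adding (registering) a service, and listing the registered services.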
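These three capabilities boil down to a dispatch on a request code over a table of name-to-handle entries. The sketch below models that with a fixed-size array. It is not the service_manager.c implementation: the `REG_*` codes and their numeric values, the `registry` types, and the choice to let a repeated registration replace the handle are all assumptions made for illustration, and plain C strings are used for service names.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Request codes of this model (numeric values are an assumption). */
enum {
    REG_GET_SERVICE = 1,
    REG_CHECK_SERVICE,
    REG_ADD_SERVICE,
    REG_LIST_SERVICES
};

#define REG_MAX 16

struct reg_entry {
    char name[32];    /* service name */
    uint32_t handle;  /* handle that clients receive on lookup */
};

struct registry {
    struct reg_entry items[REG_MAX];
    size_t count;
};

/* Linear search by name; returns NULL if the service is unknown. */
static struct reg_entry *reg_find(struct registry *r, const char *name)
{
    size_t i;
    for (i = 0; i < r->count; i++)
        if (strcmp(r->items[i].name, name) == 0)
            return &r->items[i];
    return NULL;
}

/* Dispatches one request. `arg` is the handle for ADD and the index for
 * LIST. Returns 0 on success, -1 on failure. */
int registry_handle(struct registry *r, int code, const char *name,
                    uint32_t arg, uint32_t *out_handle, const char **out_name)
{
    struct reg_entry *e;

    switch (code) {
    case REG_GET_SERVICE:
    case REG_CHECK_SERVICE:
        e = reg_find(r, name);
        if (e == NULL)
            return -1;
        *out_handle = e->handle;
        return 0;
    case REG_ADD_SERVICE:
        e = reg_find(r, name);
        if (e != NULL) {              /* registered again: replace the handle */
            e->handle = arg;
            return 0;
        }
        if (r->count == REG_MAX || strlen(name) >= sizeof(r->items[0].name))
            return -1;
        e = &r->items[r->count++];
        strcpy(e->name, name);
        e->handle = arg;
        return 0;
    case REG_LIST_SERVICES:
        if (arg >= r->count)
            return -1;                /* index past the end of the list */
        *out_name = r->items[arg].name;
        return 0;
    default:
        return -1;
    }
}
```

Listing by index means a client enumerates services by asking for entry 0, 1, 2, and so on until the request fails.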

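Summary

ServiceManager is started by the init.rc script and becomes the daemon of Binder, Android's inter-process communication mechanism, through the following steps:

- 1. Open the Binder driver file /dev/binder: open("/dev/binder", O_RDWR)

- 2. Request 128 KB of memory and map it: mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0)

- 3. Tell the Binder driver that this process is the Binder context manager (ServiceManager becomes its daemon): binder_become_context_manager(struct binder_state *bs)

- 4. Start the loop and handle IPC requests (waiting for Client requests): binder_loop(bs, svcmgr_handler);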